|

I am an incoming PhD student at the Harvard John A. Paulson School of Engineering and Applied Sciences, advised by Prof. Na Li. Prior to Harvard, I received my master's degree from Tsinghua University in 2022, where I was a member of the Intelligent Driving Lab (iDLab) at the School of Vehicle and Mobility. My advisors were Prof. Shengbo Eben Li and Prof. Sifa Zheng. I also collaborated closely with Prof. Jianyu Chen, who leads the Intelligent System and Robotics Lab at IIIS, Tsinghua University. In summer 2021, I had a great time working (remotely) with Prof. Changliu Liu, who leads the Intelligent Control Lab at the Robotics Institute, Carnegie Mellon University. I received my bachelor's degree from Tsinghua University in 2019.

Email /

CV /

Google Scholar /

Github

|

|

|

06/2022: One paper was selected as a Best Paper Award finalist at L4DC 2022. Congratulations to all coauthors!

05/2022: One paper was accepted at ICML 2022. Congratulations to all coauthors!

03/2022: One paper was accepted for oral presentation at L4DC 2022. Congratulations to all coauthors!

10/2021: I was awarded the National Scholarship, as the only recipient among more than 100 master's students in my department.

09/2021: One paper received the Best Student Paper Award at ITSC'21. |

|

I'm interested in safe control, reinforcement learning, and safety certificates, with applications to autonomous driving and robotics. Much of my research is about learning zero-violation safe policies using constrained reinforcement learning. (* denotes equal contribution) |

|

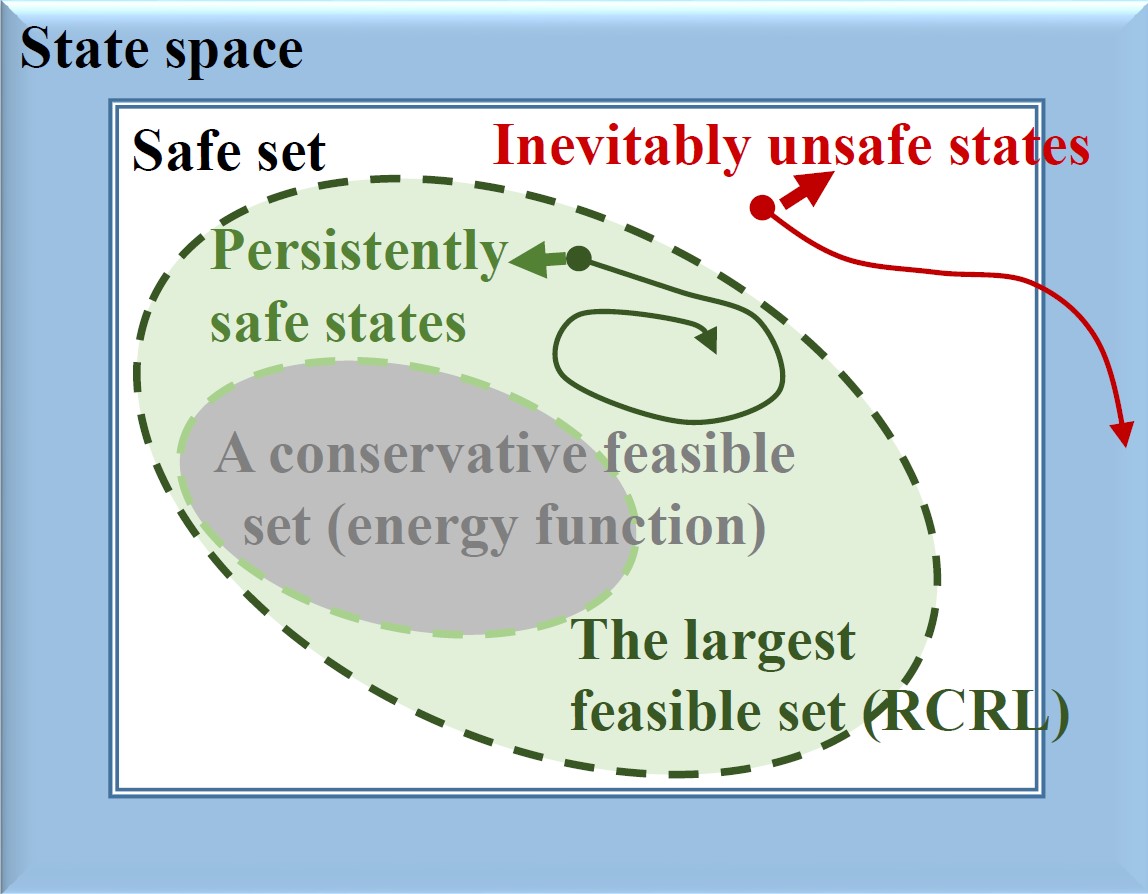

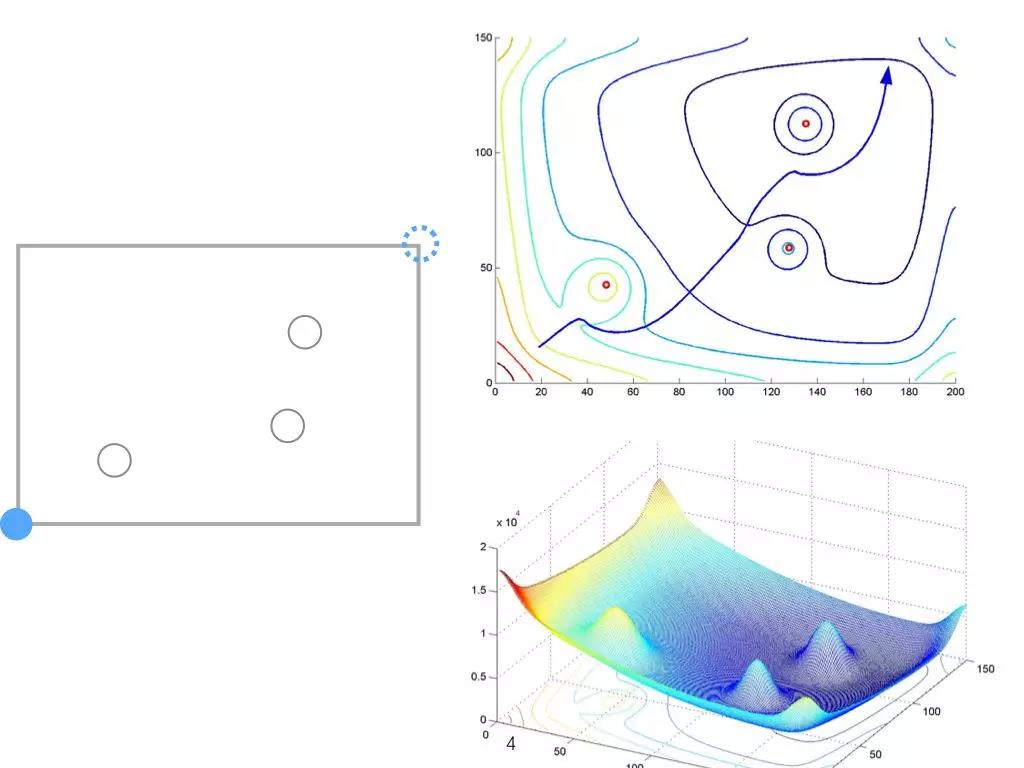

Dongjie Yu*, Haitong Ma*, Shengbo Eben Li, Jianyu Chen ICML (to appear), 2022 arXiv / Code / Env We use reachability analysis to identify feasible sets and learn optimal safe policies using constrained RL. |

|

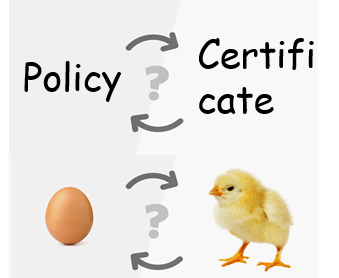

Haitong Ma, Changliu Liu, Shengbo Eben Li, Sifa Zheng, Jianyu Chen L4DC, 2022 (Oral Presentation) (Best Paper Award Finalist) Also presented at the Safe Control and Learning under Uncertainty Workshop, MECC, 2021. arXiv / workshop slides / Pre-recorded Video We use adversarial optimization to jointly learn safety certificates and safe control policies in a purely model-free manner. |

|

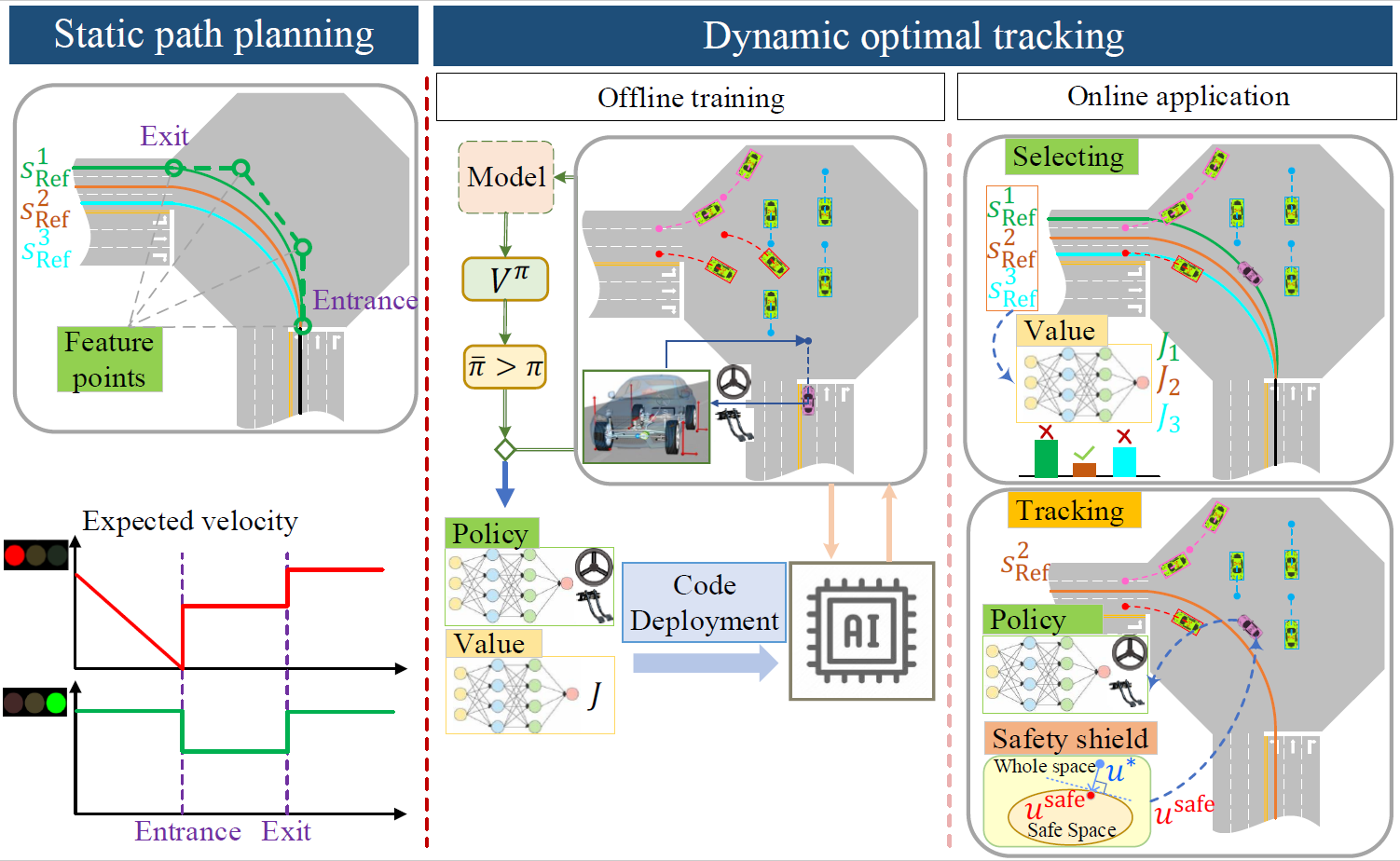

Yang Guan*, Yangang Ren*, Qi Sun, Shengbo Eben Li, Haitong Ma, Jingliang Duan, Yifan Dai, Bo Cheng IEEE Transactions on Cybernetics (early access), 2022 Paper / Video / Code A high-efficiency learning-based decision and control framework for autonomous vehicles. |

|

Haitong Ma, Jianyu Chen, Shengbo Eben Li, Ziyu Lin, Yang Guan, Yangang Ren, Sifa Zheng IROS, 2021 Code We generalize control barrier functions (CBFs) and use them to enhance the safety of model-based reinforcement learning, especially for systems in which the first-order derivatives of the constraints do not depend on the input. |

|

|

Yang Guan*, Yangang Ren*, Haitong Ma, Jianyu Chen, Shengbo Eben Li, Qi Sun, Yifan Dai, Bo Cheng IEEE International Intelligent Transportation Systems Conference (ITSC), 2021 (Best Student Paper Award) Video We train a model-based safe RL controller and deploy it on a real autonomous vehicle. |

|

Haitong Ma, Yang Guan, Shengbo Eben Li, Xiangteng Zhang, Sifa Zheng, Jianyu Chen arXiv, 2021 We study safe reinforcement learning with state constraints. A Lagrange multiplier network is utilized to guarantee state-wise constraint satisfaction. |

|

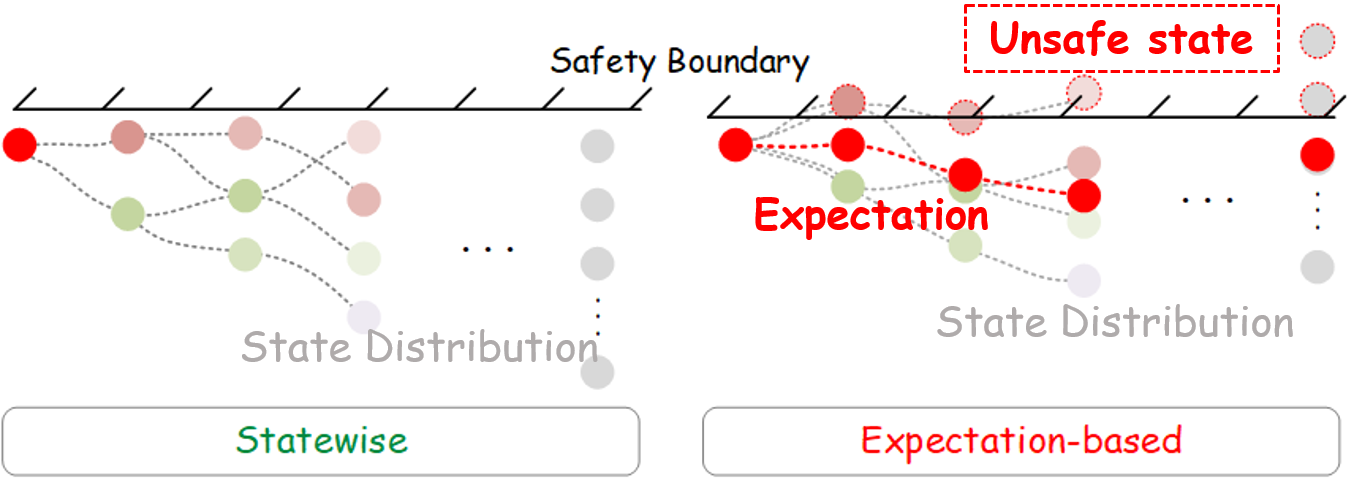

Haitong Ma, Changliu Liu, Shengbo Eben Li, Sifa Zheng, Wenchao Sun, Jianyu Chen arXiv, 2021 Existing constrained RL algorithms rely on a posterior penalty signal issued when the agent encounters danger, which makes zero-violation policies difficult to learn. |

|

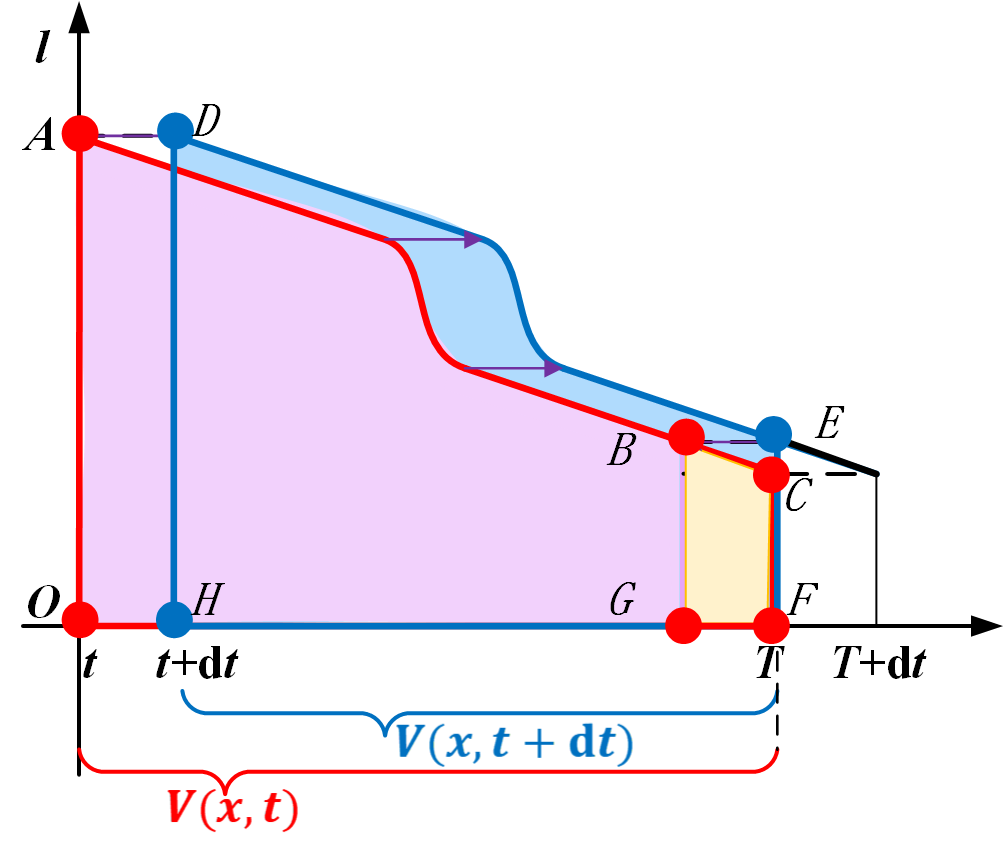

Ziyu Lin*, Jingliang Duan*, Shengbo Eben Li, Haitong Ma, Qi Sun, Jianyu Chen, Bo Cheng International Conference on Computer, Control and Robotics, 2021 (Best Presentation Award) Approximate dynamic programming for finite-horizon continuous-time optimal control problems. |

|

Special credit to Jon Barron for the source code of this website. |